Employees Fear Prison Time as Leaked Docs Show X-Rated Website Seemingly Hosted Sinister Content for Years

A recently leaked set of internal documents has sparked anxiety among employees at a popular adult website. The revelations suggest the platform may have hosted disturbing content for an extended period.

Leaked internal documents from Pornhub have revealed that the platform knowingly hosted child sexual abuse material (CSAM) for over a decade, according to discovery records mistakenly released during a major class action lawsuit in Alabama. The revelations have sent shockwaves through legal and advocacy communities, shedding light on what appears to be a long-standing and systemic failure to remove illegal content, even when employees were aware of its presence on the site.

The documents—composed of internal emails, chat logs, memos, and keyword tracking reports—show employees discussing how thousands of videos depicting child abuse were allowed to remain online, in some cases for over ten years. In one instance, a staffer joked about discovering a child pornography video from 2009 that had remained accessible on the site until 2020. Another employee admitted forgetting to delete an illegal video from their computer and acknowledged that possessing it could result in serious prison time. The employee’s colleague responded casually, joking that the company, then called MindGeek, would “vouch for us if we ever get arrested.”

These exchanges, while chilling, form just part of a larger narrative presented by the lawsuit, in which victims of child sex trafficking accuse Pornhub and its parent company of profiting from their abuse. The discovery materials suggest that moderation practices were grossly inadequate. More than 700,000 videos had reportedly been flagged by users for potential violations, including depictions of minors. Yet internal emails confirm that moderators prioritized only content that had been flagged at least 15 times, leaving countless potentially illegal videos untouched.

Keyword tracking documents show an overwhelming presence of abusive material. Terms like “12yo,” “13yo,” “7yo,” and “girls under18” yielded hundreds of thousands of results in Pornhub’s search system. Despite these figures, terms such as “childhood” and “forced”—appearing in over half a million video descriptions—were not recommended for banning. The word “teen,” often a top search term, remained heavily promoted across the platform.

Executives were reportedly conflicted about how to handle the problem. In one 2017 email, a company leader suggested blocking the misspelled word “rapped” (a likely variation of “raped”) but allowing “young girl” to remain searchable. Other emails show staff being instructed not to copy higher-ups when reporting CSAM findings, suggesting an internal effort to shield management from liability or scrutiny.

Following a high-profile New York Times investigation in late 2020 that exposed Pornhub’s handling of child abuse material, the company took significant public-facing steps. Over 10 million videos were purged from the platform, and Pornhub announced a series of reforms, including banning unverified users from uploading content, removing the video download option, and partnering with nonprofit organizations focused on online safety.

Now operating under the name Aylo, the company also claims to have implemented a biometric identity verification system for uploaders and introduced digital fingerprinting tools designed to prevent re-uploads of banned content. Additionally, a banned keyword list—covering over 60,000 terms in multiple languages—was reportedly introduced to reduce access to abusive content. Despite these changes, critics argue that the harm done during the years of inaction is irreversible.

Laila Mickelwait, founder and CEO of the Justice Defense Fund and a prominent advocate for trafficking survivors, released a strongly worded statement in response to the leak. “This was systemic criminal conduct—monetized sexual abuse on an industrial scale, driven by willful corporate decisions,” she said. “These newly released documents confirm what survivors have long known: Pornhub executives knew they were distributing child rape—and they chose profits over children’s lives.”

Legal experts expect more explosive details as the Alabama class action lawsuit progresses, with additional discovery materials slated for release in the coming months. A similar case is also underway in California, where emails show the same lax moderation thresholds were in place for years. Both lawsuits could have major implications for how tech and adult content platforms are held accountable for user-generated content and the systems they use—or fail to use—to protect the vulnerable.

Scroll down to leave a comment and share your thoughts.

-

Latest6 months ago

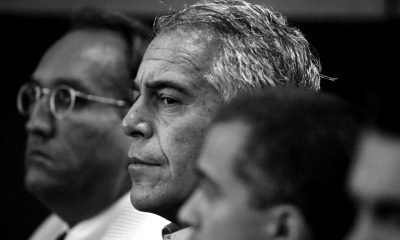

Latest6 months ago𝗔𝗹𝗹 𝗼𝗳 𝘁𝗵𝗲 𝗻𝗮𝗺𝗲𝘀 𝗺𝗲𝗻𝘁𝗶𝗼𝗻𝗲𝗱 𝗶𝗻 𝘁𝗵𝗲 𝗻𝗲𝘄 𝗝𝗲𝗳𝗳𝗿𝗲𝘆 𝗘𝗽𝘀𝘁𝗲𝗶𝗻 𝗱𝗼𝗰𝘂𝗺𝗲𝗻𝘁𝘀.

-

Latest8 months ago

Latest8 months agoParis Hilton and Kanye Connected? “They Held Me Down, Spread My Legs…” [WARNING: Graphic]

-

Latest7 months ago

Latest7 months agoHistoric Verdict Rocks America — Donald Trump Officially Convicted in a Turning Point No One Saw Coming

-

Latest8 months ago

Latest8 months agoAlex Jones Exposes What’s Going On With Dan Bongino

-

Latest5 months ago

Latest5 months agoProminent Republican Politician SWITCHES To Democrat Party

-

Latest5 months ago

Latest5 months agoBREAKING: Supreme Court Responds to Gov. Greg Abbott’s Emergency Petition to REMOVE Runaway Democrat Leader

-

Latest5 months ago

Latest5 months agoBOMBSHELL: President Trump Confirms Joe Biden Dead Since 2020!

-

Latest6 months ago

Latest6 months agoBREAKING: President Trump drops a new message for America — and it changes everything.